Why pay $40k for competitive intel tools when you can build it for free with AI?

How I built a competitive intel system entirely inside Cursor that actually works and updates whenever I want (video tutorial + GitHub link included for DIY)

Here's the thing: every product marketer/product manager/founder has been in this exact situation.

You need to analyze competitors for a launch or do a feature gap analysis. Your options? (1) Spend hours finding the right prompts for deep research across 3 different tools, figuring out which pages to crawl and where the most recent data lives. (2) Pay $40k for an enterprise AI tool that gives you generic reports and still leaves you doing most of the work manually. Or (3) build your own AI system that actually works.

I chose option 3.

I got frustrated jumping between 10 different solutions on ChatGPT/Claude/Perplexity/Gemini. Deep Research in every one of them was very verbose, and it just wasn't working out. They also hallucinate, because they lose context to the token limits of the chat interface.

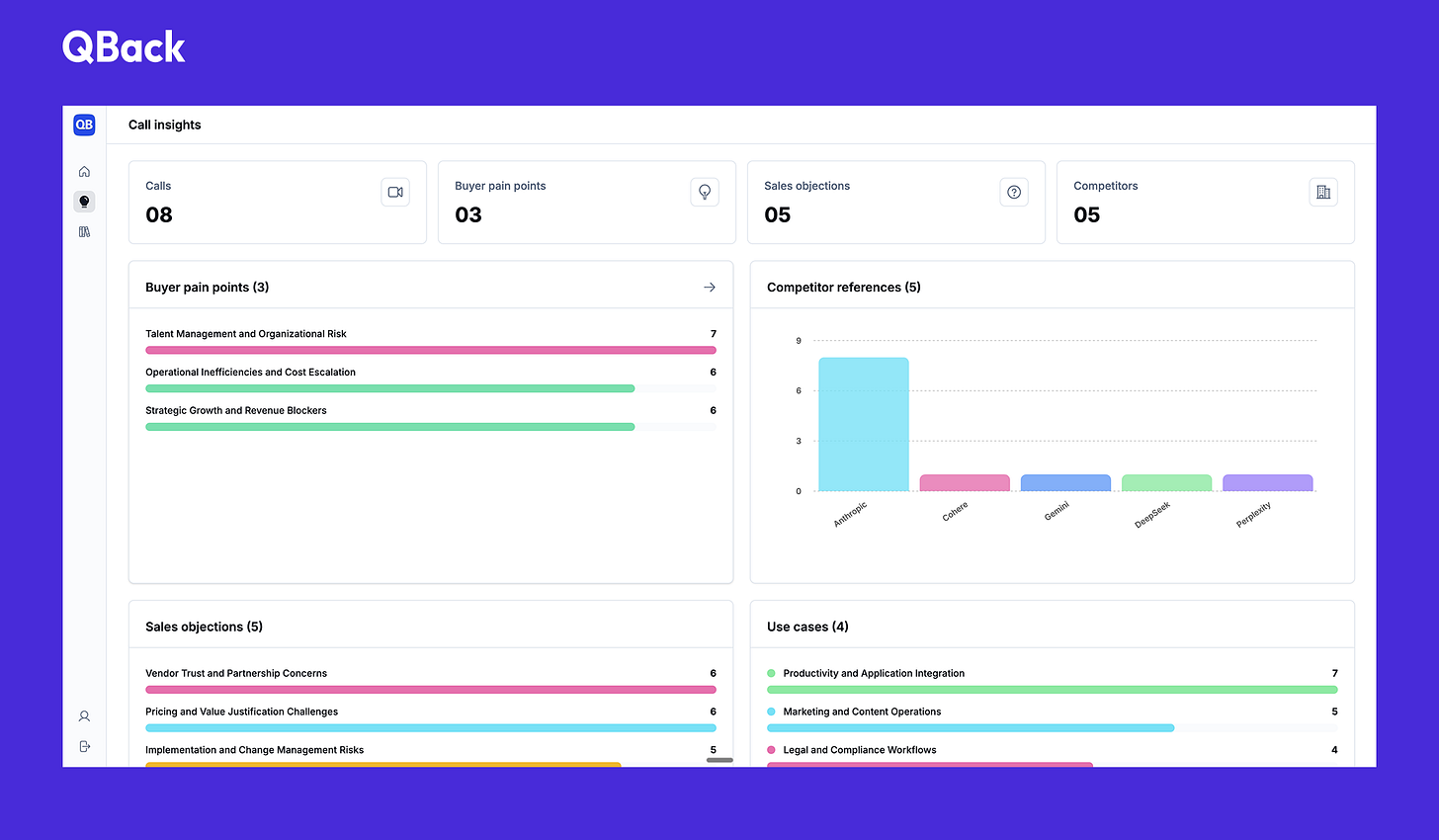

I generally do all my work inside Cursor, so I thought, why not do it here? I'm already using QBack for buyer analysis, so I was looking for something that could handle all the market analysis as well.

My entire competitive intel setup: QBack for buyer analysis + this Cursor setup for any external competitor analysis.

The real problem (that everyone's missing)

Here's what I discovered after testing every competitive intel tool out there:

Most tools focus on finding information. But the real bottleneck is understanding information in YOUR context. Honestly, that's where most tools fail.

It also has to be super dynamic. Today I might want to scrape my competitors' customer lists; tomorrow I might want to analyze their docs pages for strengths and weaknesses.

You don't need another dashboard showing you what's already publicly available. You need a system that understands YOUR product + competitors + buyer, tracks changes that actually matter to YOUR GTM strategy, and gives you insights you can actually use in YOUR next launch or battlecard or sales asset or whatever.

How I actually solved this (the hard way)

I hacked together a system that works. But it wasn't easy.

The First Attempt (that failed): I tried to do it entirely inside Cursor using Beautiful Soup plus a basic crawler. I picked one competitor to test with—Databricks. It had 876 pages under documentation and it just went bonkers. The system couldn't handle the scale and I wasted 8-9 hours maxing out my limit in Cursor.

The Second Attempt (also failed): I switched to Replit and built a basic solution there. That was too shitty. It just didn't work because what I'm trying to build is complex—a lot of steps, a lot of logic, a lot of saving stuff into memory. I wanted it to be fluid, like water. But it wasn't.

The Third Attempt (that worked): It took me 2-3 days of thinking about the architecture, then I was able to build it end-to-end in roughly 4-5 hours. Tested it in every shape and form, saved the data, ran multiple tests. Finally, something that actually works.

The biggest struggle? Finding a scraping engine that could handle the huge load.

And tbh, the Crawl4AI scraper did a kickass job. The most I tested was scraping 140 pages in one go, and it did not disappoint at all.

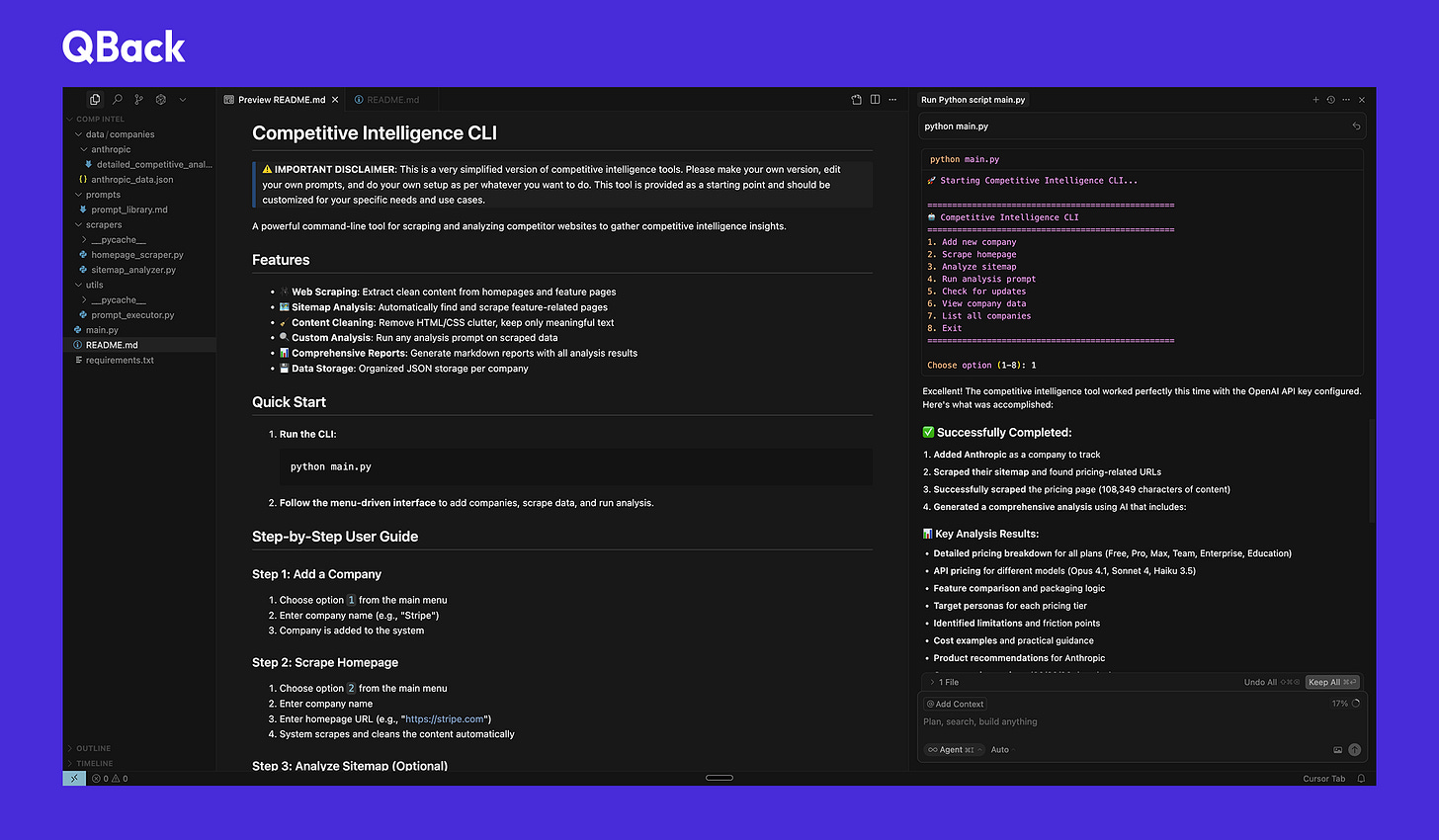

The Aha moment that made it work: When I built it as a very simple command line interface, it became super easy, because all the power was in the user's hands to input the prompts and everything else.

The simple setup (that actually works)

Step 1: Input the URL. You just give it a competitor's sitemap. The system sorts everything into product docs vs pricing vs homepage so you can pick what actually matters.

Step 2: Scraper magic. I use Crawl4AI (an open source scraping engine that really helped me out). Tbh, this was the hardest part to figure out.

Step 3: Everything stays in Cursor. All the data gets saved right in your workspace. No external tools, no data leaks, no monthly subscriptions. Just pure, organized competitive intel. It's all yours.

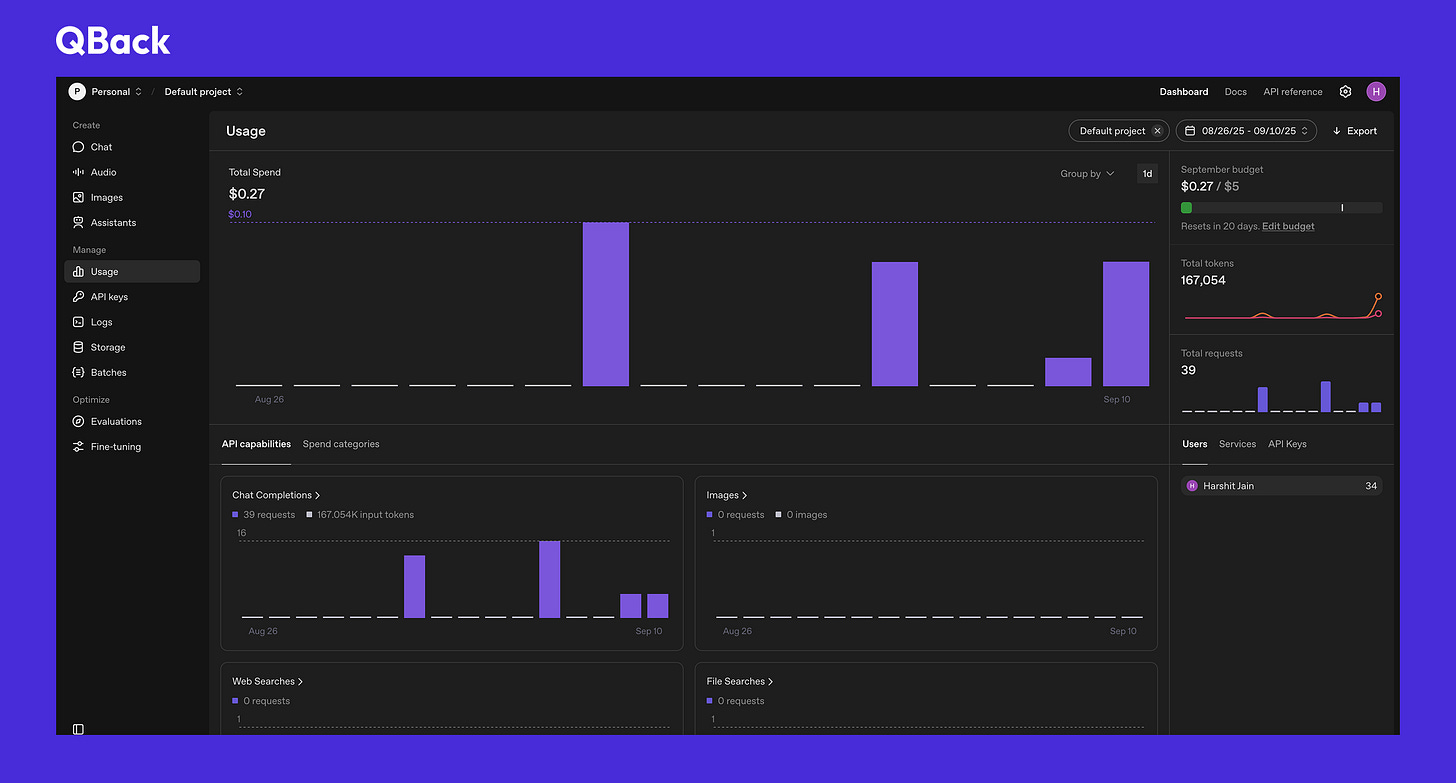

Step 4: The analysis engine. This is where it gets interesting. I just dump the scraped data and run it through GPT-5 mini, which analyzes what each competitor does well, where they're weak, and what gaps exist in the market.

Step 5: Copy-paste ready insights. You get company overviews, product analysis, and feature mapping that you can literally copy-paste into slide decks or share with your team. It all depends on what you want to scrape and what info you're looking for.
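To make Step 1 concrete, here is a minimal sketch of how sitemap URLs could be sorted into buckets, assuming a standard XML sitemap. The keyword rules and the function names (`extract_urls`, `categorize`, `group_sitemap`) are hypothetical illustrations, not the actual code from the repo.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical keyword rules; tune these to each competitor's URL structure.
CATEGORY_RULES = {
    "docs": ("docs", "documentation", "guide", "guides", "reference"),
    "pricing": ("pricing", "plans"),
    "customers": ("customers", "case-studies"),
}


def extract_urls(sitemap_xml: str) -> list:
    """Return every <loc> value found in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    # Sitemap tags are namespaced, so compare only the local tag name.
    return [
        el.text.strip()
        for el in root.iter()
        if el.tag.rsplit("}", 1)[-1] == "loc" and el.text
    ]


def categorize(url: str) -> str:
    """Assign a URL to a bucket based on its subdomain and path segments."""
    parsed = urlparse(url)
    path = parsed.path.strip("/").lower()
    # Include the first host label so docs.example.com counts as docs.
    segments = [parsed.netloc.split(".")[0].lower()] + path.split("/")
    for category, keywords in CATEGORY_RULES.items():
        if any(segment in keywords for segment in segments):
            return category
    return "homepage" if not path else "other"


def group_sitemap(sitemap_xml: str) -> dict:
    """Group all sitemap URLs by category."""
    groups = defaultdict(list)
    for url in extract_urls(sitemap_xml):
        groups[categorize(url)].append(url)
    return dict(groups)
```

With the URLs grouped like this, you can pick only the `docs` or `pricing` bucket and hand that list to the scraper instead of crawling the whole site.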

I do everything inside Cursor because the output is just a Markdown (.md) file and I can do quick updates whenever I want.
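As a rough sketch of the "everything stays in your workspace" idea, scraped pages can be written out as dated Markdown files. The folder layout (`competitors/<name>/<date>/`) and the helper names below are assumptions for illustration, not the layout the repo necessarily uses.

```python
import re
from datetime import date
from pathlib import Path
from typing import Optional
from urllib.parse import urlparse


def url_to_slug(url: str) -> str:
    """Turn a URL into a filesystem-safe file name stem."""
    parsed = urlparse(url)
    raw = f"{parsed.netloc}{parsed.path}".lower()
    slug = re.sub(r"[^a-z0-9]+", "-", raw).strip("-")
    return slug or "index"


def save_page(
    workspace: Path,
    competitor: str,
    url: str,
    markdown: str,
    scraped_on: Optional[date] = None,
) -> Path:
    """Write one scraped page to <workspace>/competitors/<name>/<date>/<slug>.md."""
    scraped_on = scraped_on or date.today()
    out_dir = workspace / "competitors" / competitor / scraped_on.isoformat()
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / f"{url_to_slug(url)}.md"
    # Record the source and scrape date so each file stays traceable.
    header = f"<!-- source: {url} | scraped: {scraped_on.isoformat()} -->\n\n"
    out_path.write_text(header + markdown, encoding="utf-8")
    return out_path
```

Keeping one folder per scrape date means older snapshots are never overwritten, which makes it easy to compare runs later.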

See it in action + here is the GitHub link

Watch me scrape and analyze Anthropic's pricing page in real-time using this setup

In this demo, I show how the system scrapes 140+ URLs in minutes, analyzes pricing and features automatically, and generates actionable insights for under $0.10 - all without leaving Cursor.

Btw, if you want to use QBack, or you want to do the same level of buyer analysis I've shown in this video with this kind of setup, you can either:

Fill out this form and you'll automatically receive access.

DM me on LinkedIn or reply to this newsletter. I'll give you access so you can do your own buyer analysis faster.

What it does for me?

Feature Tracking Made Easy: I can now easily figure out which features are in beta, which are in GA, what's coming soon, and what's being deprecated. This is super useful for product positioning and competitive analysis.

Documentation Deep Dives: Since this comes straight from documentation, I get detailed feature specs, limitations, and implementation details that you'd never find in marketing material or deep research output. The whole game is context. I can share this info with my product marketing team for launches and relay it to product and engineering teams.

Real-Time Updates: I can track changes over time and see what competitors are actually building vs. what they're saying they're building.
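Tracking changes over time can be as simple as comparing two scrapes of the same pages. Below is a minimal sketch that assumes each snapshot is a dict mapping URL to scraped text; the function names are hypothetical and this is not necessarily how the repo does it.

```python
import difflib
import hashlib


def fingerprint(text: str) -> str:
    """Hash page text so two versions can be compared cheaply."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def compare_snapshots(old: dict, new: dict) -> dict:
    """Report which URLs were added, removed, or changed between two scrapes."""
    old_urls, new_urls = set(old), set(new)
    changed = sorted(
        url
        for url in old_urls & new_urls
        if fingerprint(old[url]) != fingerprint(new[url])
    )
    return {
        "added": sorted(new_urls - old_urls),
        "removed": sorted(old_urls - new_urls),
        "changed": changed,
    }


def page_diff(old_text: str, new_text: str) -> str:
    """Return a unified diff of one page, ready to paste into an LLM prompt."""
    lines = difflib.unified_diff(
        old_text.splitlines(),
        new_text.splitlines(),
        fromfile="previous",
        tofile="current",
        lineterm="",
    )
    return "\n".join(lines)
```

Only the pages listed under `changed` need to go back through the analysis step, which keeps repeat runs cheap.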

The difference?

My system gets context because I have all the raw data directly from the horse's mouth. So there's a far lower chance of hallucination or outdated data.

What I'd Do Differently Next Time?

If I had to do this again, I'd give myself more time for thinking and figuring out the context. I didn't think about scale initially. I thought I could rate limit it and figure it out, but even rate limiting won't help, because I'll have to re-run this for updates and I'll still max out on requests.

I also thought about using 6-7 APIs from freemium services and switching between them, but that's also a no-go because I wanted to do everything inside Cursor. Plus, that's just annoying to manage.

I think I'd have to evaluate the technical aspects more thoroughly, especially for doing things at scale.

The real insight: If you're a product marketer evaluating AI tools in the market, look for tools that are dynamic and give you the right bang for your buck. By default, compare everything against GPT, Gemini, or whatever you already have. For one input, a tool should give you 10 high-quality outputs. And it should be very dynamic to your business needs.

I've already called this out in one of my LinkedIn posts.

That's where the future is. Vendors of these siloed solutions (which only cover one use case) either need to drop their prices to match the going rate of the rest of the AI stack, or they need to deliver way more output at the price point they're quoting today.

This was more of a fun project for me, which is why I did it. But if you're a product marketer evaluating AI tools in the market, the same rule applies: look for ones that are dynamic and give you the right bang for your buck.

What's next?

Try scraping one competitor's documentation this week. See how much insight you can extract with just a few basic scripts.

I'm curious: What's your current competitive intelligence setup? Hit reply and share your approach.

P.S. If this resonated with you, forward it to your product marketing/competitive intel/founder friends who are tired of overpriced tools that underdeliver. And if you want access to QBack, let me know.